Testing and Validation

Introduction

“It costs almost five times more to fix a coding defect once the system is released than it does to fix it in unit testing.”

-- Paul Grossman: Automated Testing ROI

Software testing is the process of ensuring that the software meets the requirements and works as expected. It is an essential part of the software development process. Testing helps to identify defects early in the development process, which reduces their impact on customers and the cost of fixing them.

We would love to be able to write tests that prove our software is free from defects, but that is not possible. Just because our tests pass does not mean that our software is bug-free.

"Testing can demonstrate the presence of bugs, but not their absence."

-- Edsger W. Dijkstra

History of Software Testing

Software testing has been around since the early days of computing, but it has evolved significantly over the years. In the era of punch cards and mainframes, testing was done manually as part of the development process. Without modern compilers and IDEs to help catch errors, developers had to run their code against expected inputs and outputs to ensure that it worked as intended.

After the advent of modern programming languages, testing effort shifted from developers to dedicated testers. This led to formal testing methodologies and techniques, such as black-box testing, white-box testing, and regression testing. Dedicated QA and QE teams were formed whose sole purpose was the validation and verification of the software, and it was common for companies to employ more testers than developers.

In the early 2000s, with the rise of Agile development, testing effort started to shift back to developers. Testing teams were scaled back, and developers were expected to write automated tests to ensure that their code worked as intended. This popularized test-driven development (TDD) and behavior-driven development (BDD), methodologies in which tests are written before the code.

Today, the vast majority of testing is automated. Automated testing tools and frameworks help teams write and run tests quickly and efficiently. Continuous integration and continuous deployment (CI/CD) pipelines automate the testing and deployment process, ensuring that the software goes through a consistent and repeatable testing process before it is released to customers. This reduces the time commitment required for testing and ensures that the software is tested thoroughly.

There are still some industries where manual testing is the main form of testing, but the trend is towards automated testing. The main example is the gaming industry. Games have a heavy emphasis on user experience and visual appeal, which makes them difficult to test programmatically. They also tend to have a shorter lifespan than other software, which makes maintainability less of a concern.

Why the Shift to Automated Testing?

Automated testing has several advantages over manual testing:

- Speed: Automated tests can be run quickly and efficiently, allowing developers to test their code more frequently and catch defects earlier in the development process. You don't need to wait for a human tester to check if your code works.

- Consistency: Automated tests are consistent and repeatable: they perform the same checks in the same way every time they run. This makes it easier to identify and fix defects. Relying on manual labor for regression testing is not only slow but also error-prone.

- Coverage: Automated tests can cover a wide range of scenarios and edge cases, ensuring that the software meets the requirements and works as expected. Manually testing each edge case is often impossible.

- Cost: Automated tests are cheaper to run than manual tests, because they run without the need for human testers. This lowers the cost of each test run, which makes it affordable to test the software thoroughly.

With this shift towards automated testing, there has been a matching drop in the number of dedicated testers. The team at Google that created Google Earth had a ratio of 20 developers to 1 tester. This is a stark contrast to the early days of computing, where there were more testers than developers.

Types of Automated Tests

There are several types of automated tests that can be used to test software. Each type of test has its own purpose and scope, and can be used to test different aspects of the software. The three types that I use most often are:

- Unit Tests: Tests individual units or components of the software, such as functions or classes.

- Integration Tests: Tests how different units or components of the software work together.

- Acceptance Tests: Tests the software against the requirements and user stories.

Unit tests are the fastest to run, but they only provide confidence that a narrow slice of the software works as expected. Integration tests are slower to run, but they provide a higher level of confidence that the software works as expected. Acceptance tests are the slowest to run and the most difficult to write, but they provide the highest level of confidence that the software meets the requirements.
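A minimal sketch of the first two levels, in Python with the standard `unittest` and `sqlite3` modules. The `apply_discount` function and `OrderRepository` class are hypothetical, invented for illustration. The unit test checks one function with no external resources; the integration test checks that the repository works together with a real SQLite engine running in memory. An acceptance test would sit above both and drive the whole application through its user-facing interface, so it is not shown.

```python
import sqlite3
import unittest


# Hypothetical business rule used for illustration.
def apply_discount(price_cents: int, percent: int) -> int:
    """Return the price after a percentage discount, rounded down to whole cents."""
    return price_cents * (100 - percent) // 100


class OrderRepository:
    """Hypothetical class that stores order totals in a SQLite database."""

    def __init__(self, conn: sqlite3.Connection) -> None:
        self.conn = conn
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS orders (id INTEGER PRIMARY KEY, total INTEGER)"
        )

    def save(self, total_cents: int) -> int:
        cur = self.conn.execute("INSERT INTO orders (total) VALUES (?)", (total_cents,))
        return cur.lastrowid

    def total_for(self, order_id: int) -> int:
        row = self.conn.execute(
            "SELECT total FROM orders WHERE id = ?", (order_id,)
        ).fetchone()
        return row[0]


class ApplyDiscountUnitTest(unittest.TestCase):
    # Unit test: a single function, no I/O, runs almost instantly.
    def test_ten_percent_off(self):
        self.assertEqual(apply_discount(2000, 10), 1800)


class OrderRepositoryIntegrationTest(unittest.TestCase):
    # Integration test: our code plus a real SQLite engine (in memory).
    def setUp(self):
        self.conn = sqlite3.connect(":memory:")
        self.repo = OrderRepository(self.conn)

    def tearDown(self):
        self.conn.close()

    def test_saved_discounted_total_can_be_read_back(self):
        order_id = self.repo.save(apply_discount(2000, 10))
        self.assertEqual(self.repo.total_for(order_id), 1800)
```

Run it with `python -m unittest` from the file's directory. Whether the SQLite test counts as an integration test depends on your definition: it uses a real database engine, but one that runs entirely in memory.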

This trade-off between speed and confidence is a common theme in software testing. The faster the test, the less confidence it usually provides. The slower the test, the more confidence it provides. The goal is to build a test suite that is thorough enough to catch defects, but fast enough to run frequently.

The definitions of these test types can vary depending on the context and organization. For example, some people only consider a test an integration test if it relies on external resources, such as a database or network connection. Others consider any test that tests how multiple units or components work together to be an integration test, regardless of whether it relies on external resources. Some groups also split unit tests into two types: true unit tests and component tests. They might also split acceptance tests into two types: functional tests and end-to-end tests.

I find that these subdivisions do more harm than good. They can lead to confusion and disagreement about what should be tested at each level, which just takes time away from writing tests.

Use the definitions that make the most sense for you and your organization, but be aware that there are other definitions out there.

There are also many specialized types of tests, such as performance tests, load tests, and security tests, that can be used to test specific aspects of the software. These tests are usually run in addition to the basic unit, integration, and acceptance tests. Their goal is not to validate the functional requirements of the software, but to validate the non-functional requirements, such as performance, scalability, and security.

Testing Pyramid

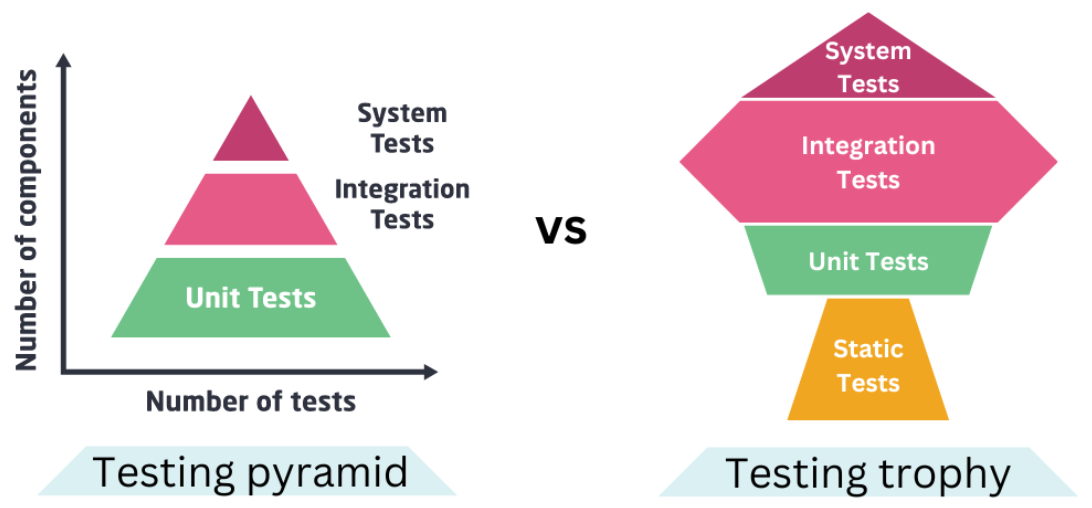

There is a lot of debate about how much time should be spent on each type of test. The testing pyramid is a popular model that suggests that most of the testing effort should be focused on unit tests, with fewer tests at the integration level, and even fewer tests at the acceptance level. This is because unit tests are fast and cheap to run, while acceptance tests are slower and more expensive to run.

An alternative model is the testing trophy, proposed by Kent Dodds, which suggests that more testing effort should be spent on integration tests, as they are more likely to catch defects than unit tests. This model is based on the idea that defects are more likely to occur at the boundaries between units or components, rather than within the units themselves.

These two models for test distribution were proposed by extremely respected figures in the software testing community, so why the discrepancy?

The real explanation is that they don't actually contradict each other. They simply use different definitions of what constitutes a unit test, an integration test, and an acceptance test. If you read Kent Dodds' article on the testing trophy, you'll see that he is really arguing against heavy use of mocks and other test doubles, not against unit tests. He argues that you should test your code in a way that is more representative of how it will be used in production. This is a good argument, but it doesn't mean that you should stop writing unit tests.

The testing pyramid defines a unit test as any test that runs quickly and doesn't rely on external resources. Its definition of an integration test assumes that the test runs more slowly and is more difficult to build.

For the testing trophy, the definition of a unit test is narrower. It defines a unit test as one that tests a single unit of code in isolation, mocking any other units of code that it interacts with. Its definition of an integration test is actually broader: it includes tests that mock external resources and validate how multiple units of code work together.

The testing trophy and testing pyramid are both valid models, but they are based on different definitions of what constitutes a unit test and an integration test. Whether you align more with the testing pyramid or the testing trophy depends mostly on your definitions of these terms.
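The difference in definitions is easier to see in code. In this hypothetical Python sketch, `PriceCalculator` depends on `TaxTable` (both invented for illustration, with made-up tax rates). The first test isolates the calculator with `unittest.mock.Mock`, which is what the testing trophy calls a unit test. The second uses the real collaborator: the trophy would call it an integration test, while the pyramid would still call it a unit test because it is fast and touches no external resources.

```python
import unittest
from unittest.mock import Mock


class TaxTable:
    """Hypothetical collaborator: looks up a tax rate (in percent) by region."""

    RATES = {"zone-a": 13, "zone-b": 0}  # made-up rates for illustration

    def rate_for(self, region: str) -> int:
        return self.RATES[region]


class PriceCalculator:
    """Hypothetical unit under test: adds tax to a net price in cents."""

    def __init__(self, taxes: TaxTable) -> None:
        self.taxes = taxes

    def gross(self, net_cents: int, region: str) -> int:
        return net_cents + net_cents * self.taxes.rate_for(region) // 100


class IsolatedUnitTest(unittest.TestCase):
    # Trophy-style "unit" test: the collaborator is replaced by a mock.
    def test_adds_rate_reported_by_tax_table(self):
        taxes = Mock(spec=TaxTable)
        taxes.rate_for.return_value = 10
        calc = PriceCalculator(taxes)
        self.assertEqual(calc.gross(1000, "zone-x"), 1100)
        # Asserting on the call couples this test to the implementation.
        taxes.rate_for.assert_called_once_with("zone-x")


class CollaborationTest(unittest.TestCase):
    # Trophy-style "integration" test: real collaborators, but still fast and
    # free of external resources, so the pyramid counts it as a unit test.
    def test_price_in_taxed_zone_includes_tax(self):
        calc = PriceCalculator(TaxTable())
        self.assertEqual(calc.gross(1000, "zone-a"), 1130)
```

The mocked test verifies how the collaborator was called, which ties it to the implementation; that coupling is the kind of thing the testing trophy argues against.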

Balance of Test Types

Forcing developers to write a specific number of tests at each level does not work well in practice. The best test suite is one that is balanced, with a mix of unit, integration, and acceptance tests. The exact mix will depend on the software being tested, the requirements, and the team's testing expertise.

The best choice is to spend most of your time on tests that you can run frequently and that you can reliably and consistently control, since you can run more of them, more often. Any test that relies on external resources, such as a database, network, or file system, is going to be slower and less reliable than a test that doesn't. Since those tests are slower and less reliable, you should have fewer of them.
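One way to keep most tests fast and controllable is to push external resources to the edges of your code. In this hypothetical Python sketch, `load_settings` accepts any text stream rather than a file path, so tests can pass an in-memory `io.StringIO` buffer and never touch the file system.

```python
import io
import unittest
from typing import TextIO


def load_settings(stream: TextIO) -> dict[str, str]:
    """Hypothetical parser for simple KEY=VALUE lines, skipping blanks and '#' comments.

    Taking an open stream instead of a file path keeps the file system out of
    the logic, so tests can feed it an in-memory buffer.
    """
    settings = {}
    for line in stream:
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        settings[key.strip()] = value.strip()
    return settings


class LoadSettingsTest(unittest.TestCase):
    def test_parses_pairs_and_ignores_comments_and_blanks(self):
        text = io.StringIO("# comment\nhost = example.test\n\nport=8080\n")
        self.assertEqual(load_settings(text), {"host": "example.test", "port": "8080"})
```

In production the caller opens the real file, for example `with open("app.conf") as f: settings = load_settings(f)` (the file name is hypothetical), and only a handful of slower tests need to exercise that path against an actual file.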

Use the testing pyramid and testing trophy as guidelines, not as strict rules. The goal is to have a balanced test suite that provides confidence that the software meets the requirements and works as expected.

Choosing Test Cases

Deciding what to test and what not to test can be challenging. You want to test as much of the software as possible, but you also want to avoid wasting time testing things that don't need to be tested. You are never going to be able to test everything, so you need to prioritize your testing efforts.

Objectives

No matter what kind of test you are writing or what kind of software you are testing, you should strive to write tests that:

- Clearly express what they are testing.

- Are easy to read and understand. Good tests should act as documentation for your code.

- Establish clear boundaries about what is being tested and what is not being tested.

- Run quickly and reliably.

- Fail for useful reasons.

Cases to Consider

When deciding what to test, you should consider the following cases:

- Common Cases: Test the software against the most common inputs and outputs. Often called "happy path" testing. These are the inputs that are most likely to be seen in practice.

- Simple Cases: Test the software against simple inputs and outputs, such as empty strings or null values. Focus on the simplest possible cases first.

- Boundary Cases: Test the software against the boundaries of the input space, such as the minimum and maximum values. Try to force results that are too large or too small.

- Error Cases: Write tests that trigger every error the software can produce.
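Here is a sketch of how the four kinds of cases might map onto tests, using a hypothetical `parse_percentage` function that accepts strings such as `"42"` or `"42%"` and returns an integer from 0 to 100. The function and its rules are invented for illustration; `None` stands in for a null value.

```python
import unittest
from typing import Optional


def parse_percentage(text: Optional[str]) -> int:
    """Hypothetical parser: turn "42" or "42%" into an int in the range 0..100."""
    if text is None:
        raise TypeError("text must be a string, not None")
    cleaned = text.strip().removesuffix("%")
    if not cleaned:
        raise ValueError("empty percentage")
    value = int(cleaned)  # raises ValueError for non-integer input
    if not 0 <= value <= 100:
        raise ValueError(f"percentage out of range: {value}")
    return value


class ParsePercentageTest(unittest.TestCase):
    def test_common_cases(self):
        self.assertEqual(parse_percentage("42%"), 42)
        self.assertEqual(parse_percentage("75"), 75)

    def test_simple_cases(self):
        with self.assertRaises(ValueError):
            parse_percentage("")
        with self.assertRaises(TypeError):
            parse_percentage(None)

    def test_boundary_cases(self):
        self.assertEqual(parse_percentage("0"), 0)
        self.assertEqual(parse_percentage("100%"), 100)
        for just_outside in ("-1", "101"):
            with self.subTest(value=just_outside):
                with self.assertRaises(ValueError):
                    parse_percentage(just_outside)

    def test_error_cases(self):
        for bad in ("abc", "12.5", "%"):
            with self.subTest(value=bad):
                with self.assertRaises(ValueError):
                    parse_percentage(bad)
```

`subTest` reports each failing input separately, which helps a test fail for a useful reason instead of stopping at the first bad value.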

To craft a good test suite, you need to clearly understand the requirements of the software: what it is supposed to do, and what it is not supposed to do. The finished test suite will give you confidence that you've built the right thing and that it works as expected.

In the future, when you or someone else needs to make changes to the software, the test suite will act as documentation for the code and provide a safety net to catch any regressions that might be introduced.

Measuring Test Quality

"I expect a high level of coverage. Sometimes managers require one. There's a subtle difference."

-- Brian Marick

The usual way to measure test quality is through some sort of coverage metric. The most common is line coverage, which measures the percentage of lines of code that are executed during the tests. Other metrics include branch coverage, which measures the percentage of branches taken during the tests, and complexity coverage, which measures the percentage of paths through the code that are executed during the tests.
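The gap between line and branch coverage shows up even in tiny functions. In this hypothetical Python example, a single test executes every line of `shipping_cost`, so line coverage reports 100%, yet the path where the `if` condition is false is never taken.

```python
import unittest


def shipping_cost(total_cents: int) -> int:
    """Hypothetical rule: orders of $50.00 or more ship free, others pay $5.00."""
    cost = 500
    if total_cents >= 5000:
        cost = 0
    return cost


class ShippingCostTest(unittest.TestCase):
    def test_large_order_ships_free(self):
        # Executes every line of shipping_cost (100% line coverage), but only
        # the "true" side of the if statement, so branch coverage is incomplete.
        self.assertEqual(shipping_cost(6000), 0)
```

A tool such as coverage.py, run with branch measurement enabled (`coverage run --branch -m unittest`, then `coverage report`), should report the `if` as a partially covered branch. Note also that a test calling `shipping_cost(6000)` without the `assertEqual` would earn exactly the same coverage while verifying nothing.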

These metrics have some advantages. They are easy to get since they are automatically generated by the testing tools. They are also easy to understand. However, they are not perfect. They can't tell you if your tests are actually testing the right things or if your implementation meets the requirements. They can only tell you if your tests are exercising the code.

Relying on coverage metrics alone can lead to a false sense of security. You can have 100% line coverage and still have bugs in your code. Concentrate on writing tests that are meaningful and that test the right things, rather than just trying to increase your coverage metrics.

While mandating a specific test coverage is a reasonable idea, rewarding developers for high test coverage is a terrible idea. Your developers will quickly learn how to game the system: the target metrics will be met, but the tests will be useless. This has been tried many times. In one instance, a follow-up study found that more than 25% of the tests didn't include any assertion statements. They were there only to increase the coverage metric.

Be careful what you choose to measure. You might get it.

Quality Test Basics

Every good test contains three steps:

- Arrange: Set up the test by creating the necessary objects and initializing the necessary variables.

- Act: Perform the action that you want to test.

- Assert: Check that the action produced the expected result.
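The three steps look like this in a minimal Python `unittest` sketch. `ShoppingCart` is a hypothetical class; the comments mark each step, and the test name states the behavior being checked, in line with the objectives listed earlier.

```python
import unittest


class ShoppingCart:
    """Hypothetical class under test."""

    def __init__(self) -> None:
        self._items: dict[str, int] = {}

    def add(self, sku: str, quantity: int = 1) -> None:
        if quantity < 1:
            raise ValueError("quantity must be at least 1")
        self._items[sku] = self._items.get(sku, 0) + quantity

    def count(self) -> int:
        return sum(self._items.values())


class ShoppingCartTest(unittest.TestCase):
    def test_adding_same_item_twice_accumulates_quantity(self):
        # Arrange: a cart that already holds two of one item.
        cart = ShoppingCart()
        cart.add("book", 2)

        # Act: add more of the same item.
        cart.add("book", 1)

        # Assert: the quantities were combined, not replaced.
        self.assertEqual(cart.count(), 3)
```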

Your goal should be to test that the code does what it is supposed to do, not that it does what you think it should do. Your tests should not validate the implementation itself; they should validate that the implementation meets the requirements.

I've had several junior developers tell me that they are just bad at writing tests. As long as they've learned the basics of the testing framework, this is never true. What they struggle with is writing code that is testable. If you find that you are struggling to write tests, it is likely that your code is not well-structured, and you should refactor it to make it more testable.

They aren't bad at writing tests. They are bad at design.
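A common example of hard-to-test code is logic that reads the system clock directly. This hypothetical Python sketch shows the problem and one possible refactoring: pass the current time in as a parameter so the test controls it, and keep a thin wrapper that supplies the real clock in production.

```python
import unittest
from datetime import datetime


# Hypothetical, hard to test: the result depends on when the test happens to run.
def greeting_untestable(name: str) -> str:
    if datetime.now().hour < 12:
        return f"Good morning, {name}"
    return f"Good afternoon, {name}"


# Hypothetical refactoring: the time is a parameter, so tests can choose it.
def greeting(name: str, now: datetime) -> str:
    if now.hour < 12:
        return f"Good morning, {name}"
    return f"Good afternoon, {name}"


def greeting_for_now(name: str) -> str:
    """Thin production wrapper that supplies the real clock."""
    return greeting(name, datetime.now())


class GreetingTest(unittest.TestCase):
    def test_before_noon_is_morning(self):
        self.assertEqual(greeting("Ada", datetime(2024, 1, 1, 9, 0)), "Good morning, Ada")

    def test_noon_counts_as_afternoon(self):
        self.assertEqual(greeting("Ada", datetime(2024, 1, 1, 12, 0)), "Good afternoon, Ada")
```

The wrapper is too simple to need much testing; the logic worth testing now lives in a function whose only inputs are its parameters.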

Maintainable Tests

The best tests are isolated from the implementation details of the code they are testing. They should test the public interface of the code, not the implementation details. This makes the tests more maintainable, as they are less likely to break when the implementation changes.

We want tests that give us more freedom to refactor our code, not ones that need to be rewritten every time we change it. Never test private methods directly. They are an implementation detail. Instead, test them through the public interface for your module.
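A minimal Python sketch of testing through the public interface. `UserRegistry` and its private `_normalize` helper are hypothetical. The test never calls `_normalize` directly; it checks the observable behavior of `register`, so the helper can be renamed, inlined, or rewritten without touching the test.

```python
import unittest


class UserRegistry:
    """Hypothetical module with one public method and one private helper."""

    def __init__(self) -> None:
        self._emails: set[str] = set()

    def register(self, email: str) -> bool:
        """Register an email; return False if it is already registered."""
        key = self._normalize(email)
        if key in self._emails:
            return False
        self._emails.add(key)
        return True

    def _normalize(self, email: str) -> str:
        # Implementation detail: free to change (or be inlined) at any time.
        return email.strip().lower()


class UserRegistryTest(unittest.TestCase):
    # Exercises _normalize only through the public register() method.
    def test_duplicate_detection_ignores_case_and_whitespace(self):
        registry = UserRegistry()
        self.assertTrue(registry.register("Ada@example.com"))
        self.assertFalse(registry.register("  ada@EXAMPLE.com "))
```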

Conclusion

Automated tests are the best way to ensure that your software meets the requirements and works as expected. They are fast, consistent, and repeatable, and they run without the need for human testers. They are an essential part of the software development process and should be used to test the software at all levels, from unit tests to acceptance tests.

When writing tests, consider both positive and negative test cases, and test the software against common, simple, boundary, and error cases. Strive to write tests that are easy to read and understand and that clearly express what they are testing. Coverage metrics such as line coverage, branch coverage, and complexity coverage are a useful measure of how much code your tests exercise, but not of whether they test the right things.

Finally, you should strive to write tests that are maintainable and that are isolated from the implementation details of the code they are testing. This will make the tests more robust and less likely to break when the implementation changes.
